If you use voice commands regularly, then you’re no doubt impressed with the progress “OK Google” has made over the past few years. This is in no small way thanks to Google’s neural network research, and now Google is making the fruits of their labors available to developers. This Thursday they announced that they are making SyntaxNet and its trained English parser Parsey McParseface available to anyone who wants to use them.

SyntaxNet is a neural network framework that serves as a foundation for Natural Language Understanding systems. With the code now open source, anyone can take these powerful language models and put them to work for their own needs. Parsey McParseface serves as an example of what SyntaxNet can do: Google reports that, given grammatically correct English, it identifies the dependencies between words in a sentence with 94 percent accuracy.
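To make a figure like "94 percent accuracy" concrete, here is a minimal sketch of one common way to score a dependency parser: count the share of words whose predicted head word matches a human annotation. This is an illustrative assumption, not Google's published evaluation code. The function name `attachment_accuracy` and the example sentence are hypothetical.

```python
def attachment_accuracy(predicted_heads, gold_heads):
    """Fraction of words whose predicted head matches the annotated head.

    Each list holds, for every word in the sentence, the 1-based position
    of the word it depends on (0 marks the root of the sentence).
    """
    if len(predicted_heads) != len(gold_heads):
        raise ValueError("both lists must cover the same words")
    if not gold_heads:
        raise ValueError("need at least one word to score")
    correct = sum(p == g for p, g in zip(predicted_heads, gold_heads))
    return correct / len(gold_heads)


# Hypothetical example sentence: "I saw the old dog"
# Annotated heads: I -> saw, saw -> root, the -> dog, old -> dog, dog -> saw
gold = [2, 0, 5, 5, 2]
# An imagined parser output that wrongly attaches "old" to "saw"
predicted = [2, 0, 5, 2, 2]

score = attachment_accuracy(predicted, gold)
print(f"{score:.0%} of dependencies correct")
```

In this toy case the parser gets four of five words right. A real evaluation would average over thousands of annotated sentences.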

See also: Here’s how Google created the new voice of Search

This software’s unusual name is a reference to the recent phenomenon during which Britain’s Natural Environment Research Council polled the internet to name their newest research vessel. The winner by a mile, “Boaty McBoatface,” was shot down by U.K. Science Minister Jo Johnson, who insisted that the vehicle should have a more “suitable” name. Nevertheless, the spirit of Boaty McBoatface lives on in the world’s most accurate language parsing software. “We were having trouble thinking of a good name,” said a Google spokesperson in a statement, “and then someone said, ‘We could just call it Parsey McParseface!’ So… yup.”

Getting computers to understand human sentences fluidly is a daunting task, and since the future is likely going to see us engaging technology conversationally, it’s important for language parsers to interpret vocalized commands with an extremely high degree of accuracy. The problem is that human language actually has a ton of ambiguity built into it. Computers don’t particularly like ambiguity.

Humans do a remarkable job of dealing with ambiguity, almost to the point where the problem is unnoticeable; the challenge is for computers to do the same. In longer sentences, multiple ambiguities compound, causing a combinatorial explosion in the number of possible structures for a sentence. The vast majority of these structures are wildly implausible, but they are nevertheless possible, and a parser must somehow discard them.
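For a rough sense of how fast that explosion happens, the sketch below counts the number of distinct binary bracketings of a sentence, which is given by the Catalan numbers. This is a stand-in chosen for illustration, not a description of how SyntaxNet enumerates or scores parses, and the function name `binary_bracketings` is hypothetical.

```python
from math import comb


def binary_bracketings(num_words):
    """Count the distinct binary tree shapes over a sequence of words.

    For n words this is the Catalan number C(n - 1).
    """
    if num_words < 1:
        raise ValueError("a sentence needs at least one word")
    n = num_words - 1
    return comb(2 * n, n) // (n + 1)


for words in (3, 5, 10, 20):
    print(f"{words:>2} words: {binary_bracketings(words):,} possible bracketings")
```

Under this simplified count, a three-word sentence has 2 possible structures, a ten-word sentence has 4,862, and a twenty-word sentence has more than 1.7 billion.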

Having this software out in the hands of devs is good for both future apps and Google’s software, as SyntaxNet will only become more powerful the more it is used in different contexts. In their release statement, Google notes how crucial it is to “tightly integrate learning and search” as part of the neural network’s continued training.

What are your thoughts regarding Google’s efforts to make the future tech-conversational? Prefer to stick with your touchscreen, or are you looking forward to bossing your computer around from across the room? Let us know in the comments below!

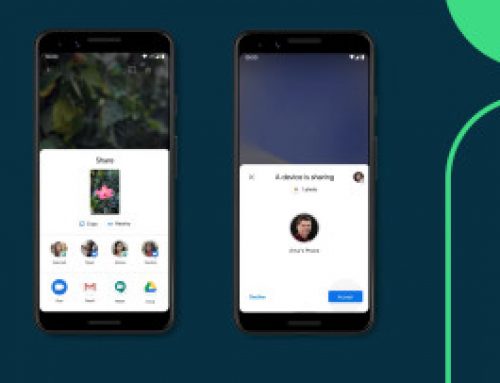
